Data Cleaning Steps in NLP using Python

Data Cleaning Techniques for NLP-Related Problems

Data preprocessing is an important step in any machine learning problem, especially when dealing with text in Natural Language Processing (NLP). In this tutorial, you will learn how to clean text data using Python so that a model can extract meaning from it. Raw text often contains punctuation, stop words, special characters and symbols, all of which make the data harder to work with. The example below uses a Sentiment Analysis dataset.

The ideal way to start any machine learning problem is to understand the data first, then clean it, and only then apply algorithms; clean data generally leads to better accuracy. Start by importing pandas to load the data into a dataframe. Libraries such as matplotlib and seaborn can be used to explore the data visually.

import pandas as pd

# Load the tweets into a dataframe
train_df = pd.read_csv("myfile.csv")

print(train_df.head())

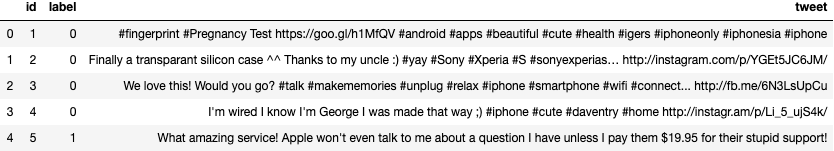

The dataframe has 3 columns: id, label and tweet. For text analysis, preprocessing only needs to be applied to the ‘tweet’ column, because that is where the text lives. The dependent variable is the ‘label’ column, which gives the tweet sentiment as 0 (Positive) or 1 (Negative). As you may have noticed in the output above, tokens such as ^^, #, :) and http:// links are not useful for predicting the sentiment of a tweet.

print(train_df.shape)

train_df.info()

print(train_df.describe())

train_df.shape: An attribute (not a method) that gives the shape of the entire dataframe (7920 rows and 3 columns).

train_df.info(): Prints a summary of the dataframe, including the data type of each column, the number of non-null values and the memory usage.

train_df.describe(): Returns summary statistics for the numeric columns, such as count, mean, standard deviation, minimum, maximum and quartiles, excluding NaN values.
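As a minimal sketch of these three calls, the snippet below builds a tiny dataframe by hand instead of reading myfile.csv. The sample rows are made up for illustration; only the column names id, label and tweet come from the dataset above. It also counts the tweets per label, which is a quick way to spot class imbalance.

```python
import pandas as pd

# Hypothetical sample rows; the real data would come from pd.read_csv("myfile.csv")
sample_df = pd.DataFrame({
    "id": [1, 2, 3, 4],
    "label": [0, 0, 1, 0],
    "tweet": [
        "Loving my new phone :) #happy",
        "Great day at the beach http://example.com",
        "Battery died again... so annoying!!",
        "Finally got tickets ^^",
    ],
})

print(sample_df.shape)        # (rows, columns) tuple; an attribute, not a method
sample_df.info()              # prints column dtypes, non-null counts, memory usage
print(sample_df.describe())   # summary statistics for the numeric columns

# Number of tweets per label
label_counts = sample_df["label"].value_counts()
print(label_counts)
```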

Data Preprocessing/Data Cleaning using Python:

Using Regex to clean data

A quick and simple way to clean text in Python is to use regular expressions (regex). You don’t have to install any additional libraries, because the re module is part of the Python standard library.

import re

def clean_text(text):
    text = re.sub(r'((www\.[^\s]+)|(https?://[^\s]+))', "", text)  # strip links
    text = re.sub(r'[!"#$%&\'()*+,\-./:;<=>?@\[\]^_`{|}~]', "", text)  # strip punctuation
    text = re.sub(r"\d+", "", text)  # strip digits
    return text

Line 4: Removes website links, meaning anything that starts with http://, https:// or www.

Line 5: Removes punctuation and special characters such as #, $, %

Line 6: Removes any numerical values present in the text
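To clean the whole dataset, the function can be applied to every value in the tweet column. The sketch below repeats clean_text so that it runs on its own, and uses two made-up tweets in place of the real file. The clean_tweet column name and the extra whitespace clean-up are assumptions added for illustration.

```python
import re
import pandas as pd

def clean_text(text):
    text = re.sub(r'((www\.[^\s]+)|(https?://[^\s]+))', "", text)      # links
    text = re.sub(r'[!"#$%&\'()*+,\-./:;<=>?@\[\]^_`{|}~]', "", text)  # punctuation
    text = re.sub(r"\d+", "", text)                                    # digits
    return text

# Hypothetical tweets standing in for train_df["tweet"]
sample_df = pd.DataFrame({"tweet": [
    "Check this out http://example.com/page #cool",
    "I scored 100% on the test!!!",
]})

# Apply the cleaner row by row, then collapse leftover runs of whitespace
sample_df["clean_tweet"] = (
    sample_df["tweet"]
    .apply(clean_text)
    .str.replace(r"\s+", " ", regex=True)
    .str.strip()
)

print(sample_df["clean_tweet"].tolist())
# ['Check this out cool', 'I scored on the test']
```

Removing a link or a number leaves a gap of two spaces behind, which is why the sketch collapses whitespace after cleaning.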

Removing Stop Words:

Words like a, an, but, we, I, do etc. are known as stop words. We usually drop stop words from the dataset because they increase the training time without adding any unique value. There is no single official list: different libraries ship lists of different sizes, from under two hundred to several hundred English words. However, words like Yes/No sometimes play an important role in problems such as sentiment analysis or reviews, so the list should be checked before it is applied. Let’s discuss how to handle stop words in NLP problems.

Using NLTK library

from nltk.corpus import stopwords

# The stop word corpus must be downloaded once with nltk.download('stopwords')
stops = set(stopwords.words('english'))

text = ['Dsfor','is','known','as','data','science','for','beginners']

for word in text:
    if word not in stops:
        print(word)

Dsfor

known

data

science

beginners
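When words such as "no" or "not" matter for the sentiment, they can be taken out of the stop list before filtering. The sketch below uses a small hand-written stop list so that it runs without any downloads; the list, the keep_words set and the remove_stop_words helper are all assumptions for illustration. With NLTK, the same idea would be to subtract keep_words from set(stopwords.words('english')).

```python
# A small hand-written stop list, used here only so the example runs without downloads
stops = {"a", "an", "the", "is", "was", "but", "we", "i", "do", "no", "not"}

# Words that carry sentiment and should survive the filtering (an assumption for this sketch)
keep_words = {"no", "not"}
custom_stops = stops - keep_words

def remove_stop_words(words, stop_set):
    # Compare in lowercase so that "The" and "the" are treated alike
    return [w for w in words if w.lower() not in stop_set]

tokens = ["The", "movie", "was", "not", "good"]
print(remove_stop_words(tokens, stops))         # ['movie', 'good']
print(remove_stop_words(tokens, custom_stops))  # ['movie', 'not', 'good']
```

With the default list, "not good" turns into "good", which flips the meaning of the sentence. The customised list keeps the negation.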

Other libraries, such as spaCy and scikit-learn, also ship a default English stop word list. Once the data cleaning is done, the next step is to tokenize the dataset. Tokenization splits text into smaller pieces called tokens. A token can be a single word, a number, or even a whole sentence.

Tokenization in NLP

from nltk.tokenize import sent_tokenize, word_tokenize

text = "Natural Language Processing is a branch of artificial intelligence that helps computers read, interpret, and understand human language"

print(sent_tokenize(text))

print(word_tokenize(text))

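['Natural Language Processing is a branch of artificial intelligence that helps computers read, interpret, and understand human language']

['Natural', 'Language', 'Processing', 'is', 'a', 'branch', 'of', 'artificial', 'intelligence', 'that', 'helps', 'computers', 'read', ',', 'interpret', ',', 'and', 'understand', 'human', 'language']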
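To see roughly what a tokenizer does under the hood, here is a minimal regex-based sketch that uses only the standard library. It is a simplification and not how NLTK works internally: it ignores abbreviations, contractions and other edge cases that NLTK handles. The sample text is made up for illustration.

```python
import re

text = "NLP is fun. It helps computers read, interpret, and understand language."

# Split after sentence-ending punctuation that is followed by whitespace
sentences = re.split(r"(?<=[.!?])\s+", text)

# Words are runs of word characters; any other non-space character is its own token
words = re.findall(r"\w+|[^\w\s]", sentences[1])

print(sentences)
# ['NLP is fun.', 'It helps computers read, interpret, and understand language.']
print(words)
# ['It', 'helps', 'computers', 'read', ',', 'interpret', ',', 'and', 'understand', 'language', '.']
```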

Once tokenization is done, we can continue cleaning the data with the methods below for better results. The NLTK library provides functions for most of them.

- Removal of Numerical/Alpha-Numeric words

- Stemming / Lemmatization (Finding the root word)

- Part of Speech (POS) Tagging

- Creating bi-gram or tri-gram models

- Dealing with Typos

- Splitting the attached words (eg: WeCanSplitThisSentence)

- Spelling and Grammar Correction
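Three of the steps in this list can be sketched in plain Python: removing numerical and alpha-numeric words, splitting attached words, and building bi-grams. The function names below are hypothetical helpers written for this example, not NLTK functions. The splitter assumes that every attached word starts with a capital letter.

```python
import re

def drop_alphanumeric(tokens):
    # Keep only tokens made purely of letters, dropping numbers and mixed tokens like "b4"
    return [t for t in tokens if t.isalpha()]

def split_attached_words(text):
    # Split at each capital letter: "WeCanSplit" -> ["We", "Can", "Split"]
    return re.findall(r"[A-Z][a-z]*", text)

def ngrams(tokens, n):
    # Slide a window of size n over the token list
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]

print(drop_alphanumeric(["see", "you", "b4", "2020", "ends"]))
# ['see', 'you', 'ends']
print(split_attached_words("WeCanSplitThisSentence"))
# ['We', 'Can', 'Split', 'This', 'Sentence']
print(ngrams(["data", "science", "for", "beginners"], 2))
# [('data', 'science'), ('science', 'for'), ('for', 'beginners')]
```

Passing n=3 to ngrams gives tri-grams in the same way.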

After the data cleaning and tokenization steps are done, we can go ahead and apply machine learning or deep learning models to the prepared text. We will discuss more NLP techniques and methods in our next post.

Terminology Alert:

Corpus: A collection of documents or texts

Tokenization: Breaking text into tokens such as words or sentences