MLflow: Track & Log Model Parameters With Example

In this MLflow tutorial, you will learn about the basic modules and functionality of MLflow. If you have worked on machine learning or deep learning models for some time, you have probably run into the problem of tracking model performance and telling the results of different models apart. MLflow, an open-source tool developed by the Databricks team, helps manage the life cycle of data science projects.

What is MLflow?

MLflow is a tool for tracking machine learning and deep learning experiments and model performance, and for packaging models for deployment. It has built-in integrations with popular machine learning libraries such as TensorFlow, PySpark, scikit-learn, and many more. It also supports deployment frameworks such as Docker and Kubernetes. It is designed to be scalable and supports cloud vendors such as Azure, AWS, and GCP, with built-in support for Docker containers.

MLOps Pipeline With MLflow:

MLflow supports MLOps (Machine Learning + Dev + Ops).

With MLflow, machine learning models can be managed and tracked more efficiently and moved to production more reliably. MLOps is the practice of delivering data science projects through repeatable and efficient workflows.

Advantages of Using MLflow:

- Shortens the time to production

- Supports automation and monitoring at every stage (integration, testing, release, etc.)

- Helps detect bugs at an early stage

- Lets you compare your models and experiments with no extra effort

A machine learning project typically moves through four stages:

1. Development: Can be used for Exploratory Data Analysis (EDA), Feature engineering, Model Evaluation & Selection, validation, and Training/ Testing.

2. Deployment: Used for project management, Scalability, Batch vs Real-time data processing.

3. Operations: Monitoring model performance, Debugging, Alert, Resource Management.

4. Delivery: Dashboards, User Interfaces, Notifications & Recommendations.

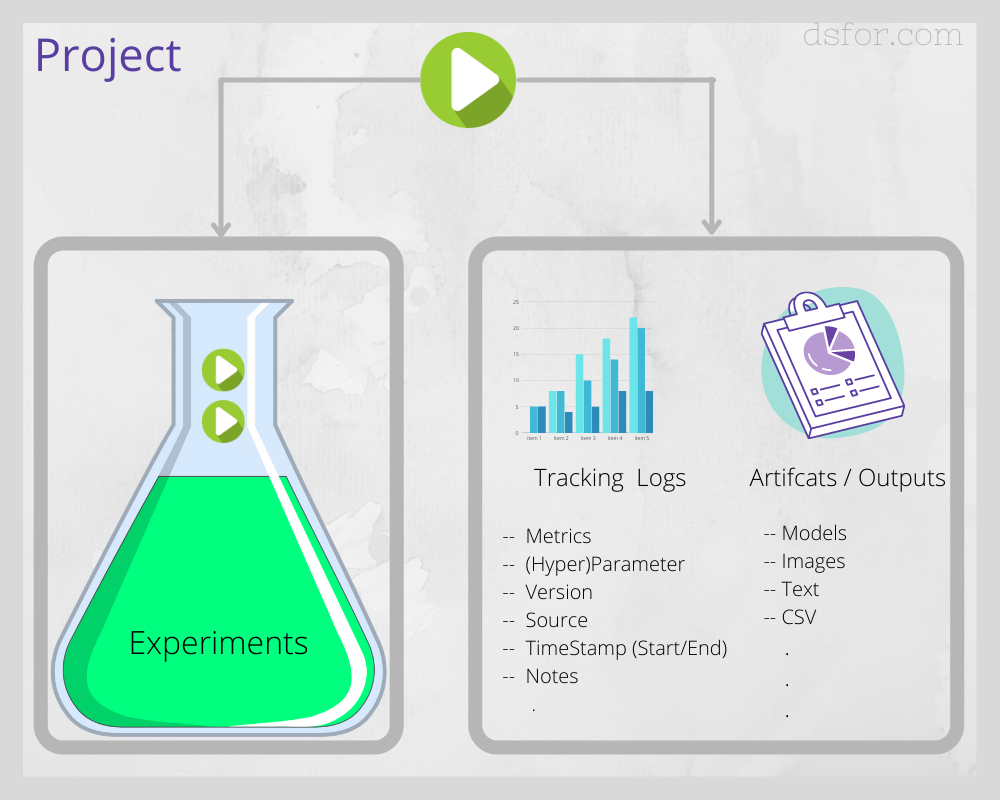

What Does MLflow Track?

- Parameters: Hyperparameters such as n_estimators, max_depth (Random Forest), alpha, solver (Regression Models), Kernel_size, drop_outs, epochs (Deep learning models)

- Metrics: AUC ROC, Accuracy, Precision, MAE, RMSE

- Data: version of the model

- Artifacts: Model binary outputs (Pickle/ HDF5), Other Outputs (images, text, csv, json)

- Source: Where the code was run

- Tags/comments: Individual or team annotations

In addition, MLflow runs can be recorded to local files, to an SQLAlchemy-compatible database, or remotely to an MLflow tracking server. MLflow helps you organize, log, store, compare, and query all your MLOps metadata.

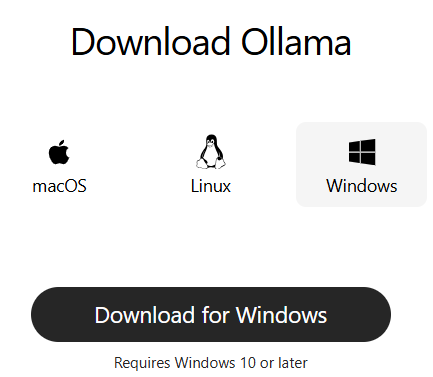

How to Install MLflow

# Install MLflow

pip install mlflow

After you install MLflow and log your first run, you should see an mlruns folder in your present working directory. Each experiment folder inside it has a meta.yaml file that records where artifacts are stored, for example artifact_location: ./mlruns/0. You can change the location by editing that entry.

Simple Machine Learning Model (Regression example)

Let's create our first simple machine learning model. Once the model is trained, we will log the parameters, metrics, and model to the mlruns folder. The regression model is trained on the small diabetes dataset.

from sklearn.datasets import load_diabetes

from sklearn.model_selection import train_test_split

from sklearn.metrics import r2_score, mean_squared_error

from sklearn.linear_model import LinearRegression

# load the diabetes dataset

ds = load_diabetes()

X, y = ds.data, ds.target

# train test and split the dataset

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.25, random_state=136)

# train a linear regression model

model = LinearRegression()

model.fit(X_train, y_train)

y_pred = model.predict(X_test)

print('R2 Score: ', r2_score(y_test, y_pred))

print('MSE: ', mean_squared_error(y_test, y_pred))

R2 Score: 0.5079136639649233

MSE: 2686.174339813467

Source Code: https://github.com/ajju23/mlflow.git

In the simple regression example above on the diabetes dataset, we computed the R-squared and MSE values. We can change the model's settings on each run and record every model, together with its source code, so the resulting values can be compared. Before that, let's look at the basic configuration and logging functions.
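For readers who want to see what the two metrics measure, here is a small hand calculation in plain Python. The four target and prediction values are made up for illustration and have nothing to do with the diabetes dataset.

```python
# Made-up targets and predictions, chosen only to keep the arithmetic simple.
y_true = [3.0, 5.0, 7.0, 9.0]
y_pred = [2.5, 5.5, 7.0, 8.0]

n = len(y_true)
squared_errors = [(t - p) ** 2 for t, p in zip(y_true, y_pred)]

# MSE: the average squared difference between target and prediction.
mse = sum(squared_errors) / n

# R-squared: 1 minus (residual sum of squares / total sum of squares).
mean_true = sum(y_true) / n
ss_res = sum(squared_errors)
ss_tot = sum((t - mean_true) ** 2 for t in y_true)
r2 = 1 - ss_res / ss_tot

print("MSE:", mse)
print("R2 Score:", r2)
```

A lower MSE and an R-squared closer to 1 both indicate predictions that sit closer to the targets.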

MLflow Logging Functions

mlflow.set_tracking_uri(): Sets where runs and artifacts are saved: a local path, a remote server, or database storage given as a connection string. The URI defaults to mlruns.

mlflow.create_experiment(): Creates a new experiment; you can create as many as you need. It returns the experiment ID as a string, which can be passed to mlflow.start_run(experiment_id=...) as a parameter.

mlflow.start_run(): Starts a new run and returns it as the active run. Used as a context manager, it ends the run automatically when the with block exits.

mlflow.log_param(): Logs a single parameter as a key-value pair. You can log multiple parameters at once by passing a dictionary to mlflow.log_params().

mlflow.log_metric(): Logs a single metric as a key-value pair. You can log multiple metrics at once by passing a dictionary to mlflow.log_metrics().

mlflow.log_artifact(): Logs any file, such as images, text, JSON, CSV, and other formats, to the artifact directory.

MLflow Example

This example fits a Ridge regression model, where the loss function is the linear least-squares function and regularization is given by the L2 norm. Below is the source code for the MLflow example:

from sklearn.datasets import load_diabetes

from sklearn.model_selection import train_test_split

from sklearn.metrics import r2_score, mean_squared_error

from sklearn.linear_model import Ridge

# Importing MLflow library

import mlflow

# set tracking uri

mlflow.set_tracking_uri('/Users/ajay/PycharmProjects/MLflow/mlruns')

# set experiment (this call is truncated in the source and the experiment name is not given)

# mlflow.set_experiment(...)

# load the diabetes dataset

ds = load_diabetes()

X, y = ds.data, ds.target

# train test and split the dataset

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.25, random_state=136)

# define parameters

alpha = 1

solver = 'svd'

# Tracking model parameters

with mlflow.start_run():

    # Train a Ridge regression model

    lr = Ridge(alpha=alpha, solver=solver)

    lr.fit(X_train, y_train)

    y_pred = lr.predict(X_test)

    # log parameters

    mlflow.log_param('alpha', alpha)

    mlflow.log_param('solver', solver)

    # Logging Metrics

    mlflow.log_metric('R2 Score', r2_score(y_test, y_pred))

    mlflow.log_metric('MSE', mean_squared_error(y_test, y_pred))

    # Logging Model

    mlflow.sklearn.log_model(lr, 'Regression Model')

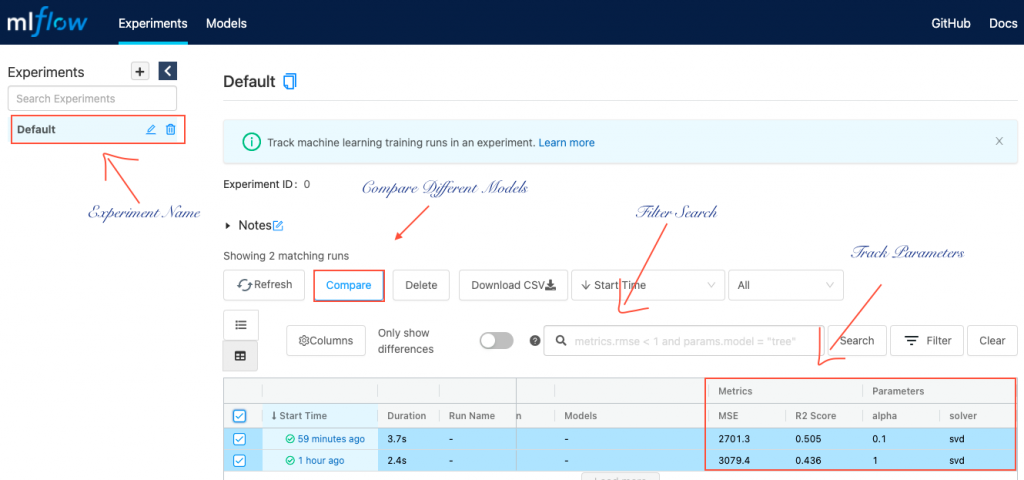

After running the above code, type mlflow ui in your terminal. The command launches the MLflow UI on localhost at http://127.0.0.1:5000. To serve it on a different port, pass -p followed by the port number.

Log Multiple Parameters Using MLflow:

You can log multiple values in one run by running a for loop inside the mlflow.start_run() context manager. You can also create a list of parameters and loop through it to log each one.

with mlflow.start_run():

    for val in range(0, 10):

        # 'value' is a placeholder metric name; log_metric requires a key

        mlflow.log_metric('value', 2 * val)

Load Model To Make Prediction

After running your model with multiple algorithms and parameters, it becomes easier to decide which algorithm performs better and which hyperparameters to choose. You can register your model on a remote server or pick a run ID from the artifacts folder. The last part of the model URI is the artifact path the model was logged under. Below is a code snippet that loads a logged model and makes predictions on unseen data.

import mlflow

import pandas as pd

logged_model = 'runs:/c50ae6f1c21149f38bd69353bc1debb9/model'

# Load model as a PyFuncModel.

loaded_model = mlflow.pyfunc.load_model(logged_model)

# Predict

loaded_model.predict(pd.DataFrame(data))

What's Next:

MLflow is a powerful tool for data scientists and data science teams working with big or small datasets. It makes tracking, monitoring, logging, and building scalable machine learning applications easier. Beyond classical machine learning algorithms, deep learning libraries such as TensorFlow and PyTorch are also supported.

FAQs: MLflow Tool Related Questions

What are the alternatives to MLflow?

MLflow has become quite popular in the open-source community over the last few years. It is easy to set up, and you can start tracking model performance within a few minutes. Alternatives to MLflow include TensorFlow Extended, Kubeflow, TensorBoard, and Neptune.ai.

Which machine learning libraries does MLflow support?

MLflow supports many machine learning and deep learning libraries, such as Gluon, H2O, Keras, Prophet, PyTorch, XGBoost, LightGBM, Statsmodels, Glmnet (R), SpaCy, Fastai, SHAP, scikit-learn, TensorFlow, and RAPIDS.

Does MLflow support big data?

Yes. MLflow can read input from, and write output to, distributed storage systems such as AWS S3 and DBFS, so it can work with large datasets on the order of 100 TB.

What are the use cases of MLflow?

MLflow can be used by individual data scientists or by data science teams. An MLflow server allows multiple users to track and log results and to compare model performance.